AnimateDiff is a tool for animating pictures, and it is available for several interfaces.

It can animate a picture that has been created in an AI environment, and it can be used in different ways.

Before writing this tutorial, I wanted to compare AnimateDiff for Automatic1111 with DiffEx, a standalone interface for AnimateDiff that can also generate pictures.

I wanted to say that DiffEx is much more convenient to use, but when I tried to reproduce in one program what I was doing in the other, I noticed that their functions don't match exactly.

Also, my own understanding of Automatic1111, DiffEx and AnimateDiff is limited, and I say it in all modesty.

AnimateDiff seems to be something to experiment with, rather than something to be too theoretical about.

Since it was impossible anyway to talk about these versions of the software without giving examples, I am going to explain how to do something that can be useful for a certain kind of video game.

This is going to be a series in 3 parts, because it seems that you can also use AnimateDiff in ComfyUI, another interface for picture generation models.

AnimateDiff for Automatic1111 is not really a novelty: you can find videos and tutorials about it elsewhere.

I followed this kind of tutorial on YouTube and installed the extension a while ago, until my own Automatic1111 (which I often improperly call "Stable Diffusion") became bloated and I reinstalled everything.

When I reinstalled AnimateDiff, I found it tedious, and I even wanted to praise DiffEx because it was much easier to install...

...Well, DiffEx (which I like) IS easier to install, but not by that much if you go about it methodically.

Let's install AnimateDiff for Automatic1111

(This explains what to do if you already have Stable Diffusion installed with Automatic1111; if not, read here.

As I said before, I tend to improperly call Automatic1111 "Stable Diffusion", while it is just one interface for it.)

- Go to the "Extensions" tab and select "Install from URL"

and enter (first line):

https://github.com/continue-revolution/sd-webui-animatediff

Then click "Install".

Once it is installed, go to the "Installed" tab and click on "Apply and restart UI".

AnimateDiff requires a specific .ckpt file (a motion module) to work.

This is the same kind of file that is sometimes used by Stable Diffusion itself. Go to:

https://huggingface.co/guoyww/animatediff/tree/main

You need one of those files.

Personally, because I like to do as others do, I am using: mm_sd_v15_v2.ckpt

Note that this will not work if you generate the picture with SDXL. In that case you need, for example: mm_sdxl_v10_beta.ckpt.

Download this file and put it in this directory:

stable-diffusion-webui\extensions\sd-webui-animatediff\model
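If you want to double-check this step, here is a minimal Python sketch that picks the motion module for your base model family and tells you whether the file is where it should be. The function names and the "sd15"/"sdxl" labels are my own (hypothetical); only the two file names and the directory come from the text above, and the install root is an assumption you have to adapt.

```python
from pathlib import Path

# File names taken from this tutorial. The "sd15"/"sdxl" keys are
# illustrative labels of mine, not identifiers used by the extension.
MOTION_MODULES = {
    "sd15": "mm_sd_v15_v2.ckpt",
    "sdxl": "mm_sdxl_v10_beta.ckpt",
}


def motion_module_path(webui_root, family):
    """Return the expected location of the motion module for a model family."""
    if family not in MOTION_MODULES:
        raise ValueError(f"unknown model family: {family!r}")
    return (
        Path(webui_root)
        / "extensions"
        / "sd-webui-animatediff"
        / "model"
        / MOTION_MODULES[family]
    )


def is_installed(webui_root, family):
    """True if the motion module file exists at the expected location."""
    return motion_module_path(webui_root, family).is_file()


if __name__ == "__main__":
    # Hypothetical install root: replace it with your own folder.
    root = "stable-diffusion-webui"
    for family in MOTION_MODULES:
        print(family, motion_module_path(root, family), is_installed(root, family))
```

Running it with your own install folder prints, for each family, the expected path and whether the file was found.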

Now let's also install ControlNet right away, because it is more convenient to do it now and because you need a bit more than just AnimateDiff.

How to install ControlNet for Automatic1111?

As before, install ControlNet from the "Extensions" tab, using this URL: https://github.com/Mikubill/sd-webui-controlnet

and, as before, restart the interface.

You then need additional files, which act like modes for ControlNet:

https://huggingface.co/lllyasviel/ControlNet-v1-1/tree/main

Personally, I have:

control_v11p_sd15_canny.pth, control_v11p_sd15_lineart.pth and control_v11p_sd15_openpose.pth

They have to be put in: stable-diffusion-webui\extensions\sd-webui-controlnet\models
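To see quickly which of these files are still missing, a small Python sketch like the following can help. It is only an illustration: the function name is hypothetical, the list contains the three files I use (yours may differ), and the install root is an assumption to adapt.

```python
from pathlib import Path

# The three ControlNet models mentioned in this tutorial.
EXPECTED_MODELS = [
    "control_v11p_sd15_canny.pth",
    "control_v11p_sd15_lineart.pth",
    "control_v11p_sd15_openpose.pth",
]


def missing_controlnet_models(webui_root, expected=EXPECTED_MODELS):
    """Return the expected model files that are not in the models folder."""
    models_dir = Path(webui_root) / "extensions" / "sd-webui-controlnet" / "models"
    return [name for name in expected if not (models_dir / name).is_file()]


if __name__ == "__main__":
    # Hypothetical install root: replace it with your own folder.
    for name in missing_controlnet_models("stable-diffusion-webui"):
        print("missing:", name)
```

An empty output means that all three files are in place.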

Basically, ControlNet is very useful to control (and copy) poses.

In the txt2img panel, there is now a new "ControlNet" options panel.

But we are not going to use ControlNet right away...

So let's say you want to create a visual novel...

A character is speaking in front of you: she is a sphinx woman in an Egyptian setting, she is attractive, and she is asking you to solve a riddle.

You want the picture of this character to look more real than real.

You want to give some life to the character, so you are going to generate a little animation instead of just creating a still picture.

It is perfectly possible to use AnimateDiff to animate a character that has been generated by AI.

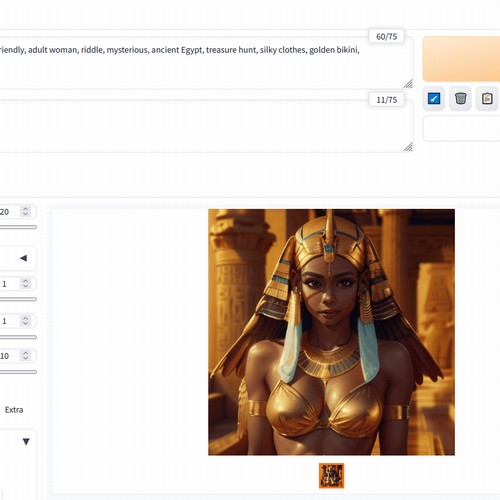

So I first create a single picture with these keywords:

hyperrealism, Sphinx, wings, Photographic, cinematic photo, film, professional, 8k, highly detailed, golden jewelry, tanned, georgeous, brunette, speaking, welcoming, adult woman, riddle, mysterious, ancient egypt, treasure hunt

And for the negative prompt, I'll go for "easynegative" as the only negative prompt.

You have to install this embedding to use this negative prompt:

https://civitai.com/models/7808/easynegative

And that should give you an "easynegative.pt" file to put here: stable-diffusion-webui\embeddings

As an example, I'll use DreamShaper 8:

https://civitai.com/models/4384?modelVersionId=128713

(You can probably use another model; I give this information for the sake of reproducibility.)

Be warned that this model can generate NSFW content and should only be used by responsible adults.

By tuning the keywords, I found that these settings give me something interesting:

hyper-realism, Sphinx, wings, Photographic, cinematic photo, film, professional, 8k, highly detailed, golden jewelry, tanned, gorgeous, speaking, friendly, adult woman, riddle, mysterious, ancient Egypt, treasure hunt, silky clothes, golden bikini, magnificent, indoors, temple,

Negative prompt: easynegative, ugly face, nude, nsfw

Steps: 20, Sampler: DPM++ 2M Karras, CFG scale: 10, Seed: 4068673553, Size: 512x512, Model hash: 879db523c3, Model: dreamshaper_8, Denoising strength: 0.7, Hires upscale: 2, Hires upscaler: Latent, TI hashes: "easynegative: 66a7279a88dd", Version: v1.7.0

Once I have the basic picture, I make the animation with AnimateDiff.

So I check "Enable AnimateDiff", number of frames: 16, fps: 16

To get a closed loop in AnimateDiff (which is what we want), check "A".

If you want to know what the other letters do, go to this page:

https://github.com/continue-revolution/sd-webui-animatediff#webui-parameters

and read item 6).

Note that you don't have this option to create loops in DiffEx, but you can perfectly well make loops anyway. You just have less control over it.

I don't need many frames. For testing purposes, it is better anyway to keep the number low (16 is usually fine).

It can of course be adapted as you wish.

You could check "Hires. fix", because this resampling may improve the quality of the pictures, but it also reduces the generation speed.

In the end, I get an animated GIF with these settings:

hyper-realism, Sphinx, wings, Photographic, cinematic photo, film, professional, 8k, highly detailed, golden jewelry, tanned, gorgeous, speaking, friendly, adult woman, riddle, mysterious, ancient Egypt, treasure hunt, silky clothes, golden bikini, magnificent, indoors, temple,

Negative prompt: easynegative, ugly face

Steps: 20, Sampler: DPM++ 2M Karras, CFG scale: 10, Seed: 4068673553, Size: 512x512, Model hash: 879db523c3, Model: dreamshaper_8, AnimateDiff: "enable: True, model: mm_sd_v15_v2.ckpt, video_length: 16, fps: 8, loop_number: 0, closed_loop: A, batch_size: 16, stride: 1, overlap: 4, interp: Off, interp_x: 10, mm_hash: 69ed0f5f", TI hashes: "easynegative: 66a7279a88dd", Version: v1.7.0
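If you want to reuse these values in a script, the settings line can be split into key/value pairs. Below is a minimal Python sketch of my own (it is not the parser used by Automatic1111, and the names `PAIR` and `parse_settings` are hypothetical). It assumes that pairs are separated by commas and that a value containing commas is wrapped in double quotes, as is the case for the AnimateDiff field above.

```python
import re

# "key: value" pairs separated by commas. A value may be wrapped in double
# quotes, in which case it is allowed to contain commas itself.
PAIR = re.compile(r'\s*(\w[\w \-/]*):\s*("(?:\\.|[^\\"])*"|[^,]*)(?:,|$)')


def parse_settings(line):
    """Split a settings line into a dict of strings (quotes are removed)."""
    result = {}
    for key, value in PAIR.findall(line):
        value = value.strip()
        if len(value) >= 2 and value.startswith('"') and value.endswith('"'):
            value = value[1:-1]
        result[key.strip()] = value
    return result


# The settings line from this tutorial, split only for readability.
settings_line = (
    'Steps: 20, Sampler: DPM++ 2M Karras, CFG scale: 10, Seed: 4068673553, '
    'Size: 512x512, Model hash: 879db523c3, Model: dreamshaper_8, '
    'AnimateDiff: "enable: True, model: mm_sd_v15_v2.ckpt, video_length: 16, '
    'fps: 8, loop_number: 0, closed_loop: A, batch_size: 16, stride: 1, '
    'overlap: 4, interp: Off, interp_x: 10, mm_hash: 69ed0f5f", '
    'TI hashes: "easynegative: 66a7279a88dd", Version: v1.7.0'
)

settings = parse_settings(settings_line)
# The quoted AnimateDiff value has the same shape, so it can be parsed again.
animatediff = parse_settings(settings["AnimateDiff"])

print(settings["Sampler"])                              # DPM++ 2M Karras
print(animatediff["video_length"], animatediff["fps"])  # 16 8
```

All values stay strings here; convert them with `int()` or `float()` where you need numbers.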

- In the end, I get this:

(click on the picture to see the little animation)

Would it be a good animated image for a visual novel?

I think so.

Now let's see one of the main uses of ControlNet with AnimateDiff:

The ability to copy the movements of a character in a video and use them as a reference to create a new video.

So we can keep all the settings above, but we will now use ControlNet in addition.

How to use AnimateDiff with ControlNet?

I downloaded this video and loaded it in AnimateDiff: https://pixabay.com/videos/santa-claus-father-christmas-80704/

Set the fps (frames per second) to 8. When the fps is higher than 8, the video seems to go too fast.

For testing purposes, I always select 16 for the "number of frames". It is the minimum value if "context batch size" is 16 (which is more or less what works best).
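The number of frames follows directly from the fps and the length you want for the animation. Here is a tiny Python sketch of that arithmetic. The function name is hypothetical, and treating the context batch size as a lower bound is simply my reading of the remark above, not something I checked in the extension's code.

```python
def frames_needed(duration_seconds, fps, context_batch_size=16):
    """Frames for a clip of the given length, never below the context batch size."""
    frames = round(duration_seconds * fps)
    return max(frames, context_batch_size)


print(frames_needed(10, 8))  # 10 seconds at 8 fps -> 80
print(frames_needed(1, 8))   # 8 frames would be too few -> 16
```

So a 10-second clip at 8 fps needs 80 frames, while a short test stays at the minimum of 16.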

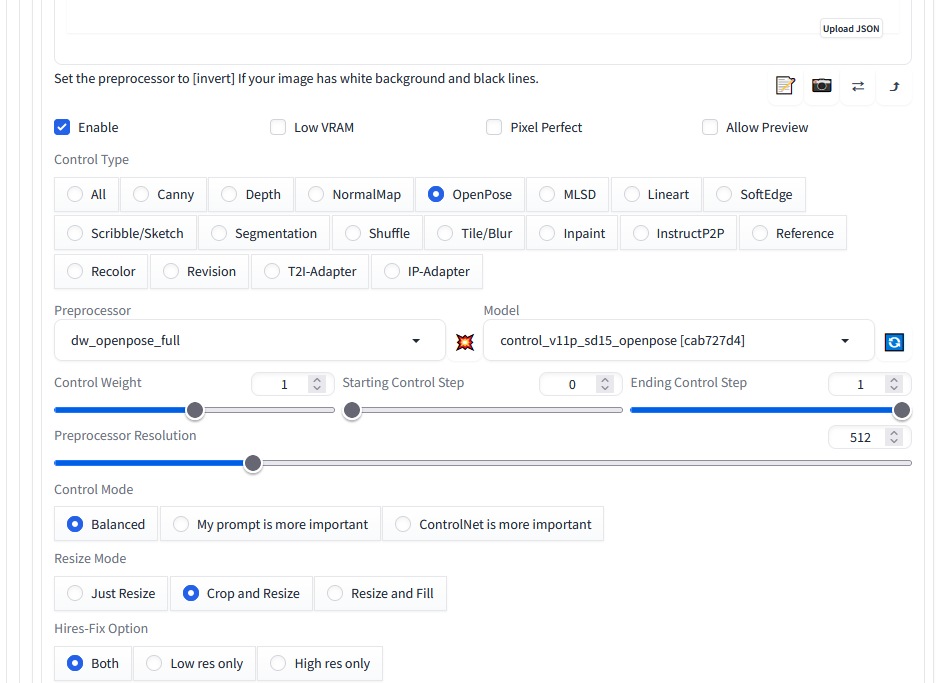

So now that you have ControlNet installed: enable ControlNet, select OpenPose, preprocessor "dw_openpose_full" and model "control_v11p_sd15_openpose".

You can play with Control Weight, among other things.

In the end, by trying new seeds and by finally changing the number of frames to 80 (8 fps x 10 sec = 80), I get this resulting GIF:

The resulting video (here as WebM) is, in my opinion, neither ugly nor perfect. It doesn't serve any defined purpose (it is a demo) and is probably already a bit too long, but it could be, for example, part of a clip show and could therefore be useful for something. That's why I stopped improving it further (meanwhile, I also got better results with DiffEx).

And remember that this GIF was generated from the rhythm of this character: https://pixabay.com/videos/santa-claus-father-christmas-80704/

If the body shape of my Sphinx had matched the body shape of my Santa, the result would have been better. The GIF has a small problem with body proportions, and a LoRA dedicated to correcting this problem could also be useful.

Note that you can load these GIFs in the PNG Info panel of Automatic1111 and retrieve the data with which they were generated.