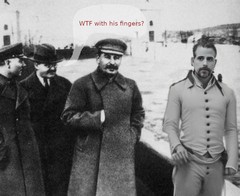

If you click on the thumbnail above, you will find a picture that is obviously not real. I generated it with the img2img tool of Automatic1111 and used GIMP to correct the outline of one character.

The rise of a technology that can alter our perception of reality usually raises concerns about its misuse.

Are you actually able to spot fake pictures?

Here are 2 games where you can test your skills:

https://ai-or-human.github.io/

https://www.caitfished.com/ (it was offline the last time I tried, but it was online before)

While some pictures instinctively look fake, it may be harder to tell whether a picture is objectively fake.

- For the picture above, one way to tell is to notice that it is a modified version of another, culturally significant picture.

- Another way is to notice that real people usually don't use speech balloons... Sadly, this is a bit trickier: you can then detect that a picture has been altered, but that doesn't mean the underlying picture wasn't genuine.

- There is a problem with the noise of the picture: it is not uniform.

- Also, there is the fallacy "argumentum ad molotovum" 🙄: the picture is fake because no true Molotov (replace Molotov with whatever category you want) would say that. Sadly, history has shown that some people are, from time to time, arbitrarily put in the wrong category.

- Finally, since the picture above shows a telltale sign of AI generation (the bad fingers), you could simply infer that the picture is likely fake. Sadly, one day some people will come to you with a sad face to explain that thalidomide was a real thing and that it is "disabilist" to claim that PEOPLE are fake just by counting their fingers. Therefore it should be illegal to claim that pictures are fake just by counting fingers (can you see how fast I can slide from "genuine concern about other people's problems" to "control freak about freedom restrictions"?), and therefore a Kommissar of censorship (I find the link ironic in so many ways) should be appointed. Anyway, we can expect generative AI to get better at fingers...
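The non-uniform noise mentioned above can be measured roughly. Below is a minimal sketch, assuming the picture is already loaded as a 2D list of grayscale values (0 to 255): it estimates the noise of each block as the variance of a simple high-pass residual (pixel minus the mean of its 4 neighbours), then compares the noisiest block with the cleanest one. The block size and the max/min ratio are my own illustrative choices, not a standard forensic method; a large ratio only hints that regions may have been produced or edited separately.

```python
from statistics import pvariance


def residual(img, y, x):
    """High-pass residual: pixel value minus the mean of its 4 neighbours."""
    neighbours = img[y - 1][x] + img[y + 1][x] + img[y][x - 1] + img[y][x + 1]
    return img[y][x] - neighbours / 4


def block_noise_levels(img, block=8):
    """Variance of the residual inside each block (border pixels are skipped)."""
    h, w = len(img), len(img[0])
    levels = []
    for by in range(1, h - 1, block):
        for bx in range(1, w - 1, block):
            res = [residual(img, y, x)
                   for y in range(by, min(by + block, h - 1))
                   for x in range(bx, min(bx + block, w - 1))]
            if len(res) > 1:
                levels.append(pvariance(res))
    return levels


def noise_spread(img, block=8):
    """Ratio between the noisiest and the cleanest block (close to 1.0 = uniform noise)."""
    levels = block_noise_levels(img, block)
    lowest, highest = min(levels), max(levels)
    return float("inf") if lowest == 0 else highest / lowest
```

Real photos also have smooth and textured areas, so a high ratio is a reason to look closer, not a verdict.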

Also, while I think about it: a picture doesn't need to be fake to be misleading. Take some random picture, arbitrarily give it a name, for example the name of a deity, and the media can produce content out of it for 10 years (find the English page and compare which pictures are used and in which categories the English and the Arabic articles are filed). And no, it is not linked to only one religion... (or here)

(Here I comment on society; scroll down if you just want a list of tools to detect fake pictures.)

It looks like we now have to establish the truth by detecting the fake things [produced by the bad guys]; otherwise, society deserves to be censored (to protect the citizens?). I don't think it matters that much (it is a catch-22).

I think the Western world is living in a period when censorship is presented as the solution to a problem called "freedom of speech", and we can expect the next scandals to be the next nails in the coffin of freedom. You know, this thing that is dangerous when other people have it?

Am I misusing the concept of cultural determinism if I claim that the more "social theories" (whatever you want) are promoted to students, the more the topic is advertised to them? And the less obvious useful application the topic has, and the more costly it is to study, the more it pushes students to accept social structures that make their diplomas actually useful (why not as "gatekeepers of the truth", keeping the mainstream culture free of "undesirables" [other example], in a very serious company or in an administration? Or tracking down the false information of opponents [and less so the information of their own political side]).

What I want to say is that nowadays, AI or not, fake news and fake pictures in the media are not really a new problem (see the picture with Stalin). If only a few people can forge pictures, fake pictures are trusted more. But if many people can forge pictures, people should also be more cautious and trust pictures less. I think fake pictures are going to push people to believe even more what they already believed, because they give a reason to doubt any picture.

What makes censorship more attractive, AI or not, is that it can give a job to people with a social science degree, for example because of the expertise they claim to have. It can give a job to lawyers (what is pushed in the USA is later pushed in the rest of the Western world). It gives content to the media, to politicians, to "experts". It helps companies look like they have a soul, while they are just trying to match what they think the modern world is. It helps the film industry renew its content by rebooting some franchises. It even lets the censored groups play the underdog ("oh, look at me, I am censored * sob * * sob * here is the link where you can donate money" [but you still see them])...

I am sure I could find other players with an interest in pushing censorship...

I don't want to denounce anyone in particular, because I know perfectly well that once the status quo is replaced, the exact same process will happen with the new one.

I think the real problem is not so much the topics that supposedly deserve censorship as the economy behind it (even when no one is actually paid).

If you want to fight censorship, fight the structures that make it "economically" interesting.

What do you do if you are afraid that an AI is going to take your job? You create fake tasks that AI is not supposed to be able to do...

So I gave some ideas for spotting fake pictures, along with their limits. I also explained why, in my opinion, detecting fake pictures is not that critical (you can mislead people with real pictures, and more fake pictures simply means that people will adapt to them), and why, dangerous AI or not, we are going to get more censorship anyway: society seems to want it... Society has a structure that seems to favor it (at least for some individuals; remember that since censorship has a cost, your freedom is their money or power). So I don't really expect to change the trend of Western societies toward more and more censorship...

But can we actually use tools to detect fake pictures by ourselves?

-1 You can read this previous article about how to use the header (metadata) of a file to see how it was generated (Stable Diffusion keywords, Photoshop headers), and also how to use TinEye or Google Lens (the search-by-image feature) to try to find the source of a picture.
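Reading that metadata does not require a dedicated tool. Below is a minimal sketch, using only the Python standard library, that lists the text chunks (tEXt and zTXt) of a PNG file. As far as I know, Automatic1111 stores its generation settings in a text chunk named `parameters`; treat that key name, and the `SUSPICIOUS_KEYWORDS` list, as assumptions to check against your own files. Metadata is trivially stripped or rewritten, so an empty result proves nothing.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
# Assumed markers that generators or editors may leave behind (not an official list).
SUSPICIOUS_KEYWORDS = ("parameters", "Steps:", "Sampler:", "Stable Diffusion", "Photoshop")


def png_text_chunks(data: bytes) -> dict:
    """Return the key/value pairs stored in the tEXt and zTXt chunks of a PNG."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    texts = {}
    pos = len(PNG_SIGNATURE)
    while pos + 8 <= len(data):
        # Each chunk: 4-byte big-endian length, 4-byte type, data, 4-byte CRC.
        length, ctype = struct.unpack(">I4s", data[pos:pos + 8])
        body = data[pos + 8:pos + 8 + length]
        if ctype in (b"tEXt", b"zTXt"):
            key, _, value = body.partition(b"\x00")
            if ctype == b"zTXt":
                # First byte is the compression method (0 = zlib/deflate).
                value = zlib.decompress(value[1:])
            texts[key.decode("latin-1")] = value.decode("latin-1")
        elif ctype == b"IEND":
            break
        pos += 12 + length
    return texts


def suspicious_entries(texts: dict) -> list:
    """Return the keys whose key or value contains one of the assumed markers."""
    return [key for key, value in texts.items()
            if any(word in key or word in value for word in SUSPICIOUS_KEYWORDS)]
```

To use it, open the file in binary mode and pass its bytes, e.g. `png_text_chunks(open("image-1.png", "rb").read())`.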

If a picture is fake, it may already be listed as fake. If a picture is real, someone may explain where it comes from. And if the picture really matters, why is it not listed anywhere else (yet)?

For information, Google seems to be planning to add a "context" feature to its image search engine. This feature should be implemented within the next few months, and it will include the ability to detect AI-generated pictures.

I don't know yet whether non-indexed pictures will be recognized, but probably yes...

-2 Find a suitable tool to detect whether a picture is fake... And this is where it gets complicated...

I used the websites I linked above as a reference source of fake pictures.

Below are all 17 pictures that the website caitfished.com used (around May 10th, 2023), which I had saved on my computer.

(I don't own any copyright on the picture above; it was created by assembling thumbnails of pictures in an HTML file for an educational purpose: to illustrate how similar AI-generated pictures can be.)

Since this website is now offline, I assembled the pictures I had saved on my computer.

All the pictures in the first line are real. It is possible to find the source of some of them with Google Lens.

All the pictures in the 2 other lines are fake (at least as far as I remember, since the source of these pictures is offline. Edit: one more was real, see below). I sorted the pictures by how similar they look. I guess that all these pictures were likely generated with a very similar prompt (if not the same one). Very often, I am just not able to tell whether an individual picture is fake. But once I sort all the pictures, I can see that they look too much alike not to suspect that they are fake. You can also notice that the lighting of these pictures is very similar compared with the other pictures.
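Sorting pictures "by how similar they look" can be partly automated. Below is a minimal sketch, assuming each picture is already loaded as a 2D list of grayscale values and is at least 8x8 pixels: it computes an average hash (shrink to an 8x8 grid, one bit per cell above or below the mean), then orders the pictures greedily so that each one is followed by its closest remaining neighbour in Hamming distance. Average hashing is a generic technique I swapped in for illustration; it is not what I did by hand.

```python
def average_hash(img, size=8):
    """64-bit average hash of a grayscale image given as a list of rows."""
    h, w = len(img), len(img[0])
    cells = []
    for cy in range(size):
        for cx in range(size):
            # Mean of the pixels that fall into this cell of the size x size grid.
            rows = range(cy * h // size, (cy + 1) * h // size)
            cols = range(cx * w // size, (cx + 1) * w // size)
            values = [img[y][x] for y in rows for x in cols]
            cells.append(sum(values) / len(values))
    mean = sum(cells) / len(cells)
    bits = 0
    for value in cells:
        bits = (bits << 1) | (value > mean)
    return bits


def hamming(a, b):
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


def order_by_similarity(hashes):
    """Greedy chain: start with picture 0, then always jump to the closest unused one."""
    order = [0]
    remaining = set(range(1, len(hashes)))
    while remaining:
        last = hashes[order[-1]]
        nxt = min(remaining, key=lambda i: (hamming(last, hashes[i]), i))
        order.append(nxt)
        remaining.remove(nxt)
    return order
```

Pictures generated from the same prompt tend to land next to each other in the resulting order, which is roughly what sorting them by eye does.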

So one idea for detecting AI-generated pictures is to use another AI to detect similar features.
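In practice, "using another AI" usually means comparing feature vectors (embeddings) produced by a pretrained image model. Below is a minimal sketch, assuming you already have one embedding per picture from a model of your choice (the vectors in the example are toy values, not real embeddings): it flags every picture whose nearest neighbour is more similar than a threshold. The default threshold of 0.95 is an arbitrary, hypothetical value that would need tuning.

```python
import math


def cosine(u, v):
    """Cosine similarity between two feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def near_duplicates(embeddings, threshold=0.95):
    """Map each picture whose nearest neighbour reaches the threshold to (neighbour, similarity)."""
    flagged = {}
    for i, u in enumerate(embeddings):
        similarity, nearest = max(
            (cosine(u, v), j) for j, v in enumerate(embeddings) if j != i
        )
        if similarity >= threshold:
            flagged[i] = (nearest, similarity)
    return flagged
```

For example, `near_duplicates([[1.0, 0.0, 0.0], [0.99, 0.1, 0.0], [0.0, 0.0, 1.0]])` flags the first two toy vectors as each other's near duplicates and leaves the third one alone. The comparison is pairwise, so the cost grows with the square of the number of pictures.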

Edit: And here I made a mistake that I am now able to correct, because caitfished is online again:

So this technique of sorting pictures to detect fakes is not perfect either, and it only works for small batches of pictures anyway.

(end of the Edit)

The website https://ai-or-human.github.io/ has content that is a bit harder to categorize.

(This article is about detecting fake pictures, not about detecting "fake" articles.)

I took most of the sites I link here for detecting fake pictures from this French video (at least the links that are actually useful).

So what tools was I able to find to detect fake pictures?

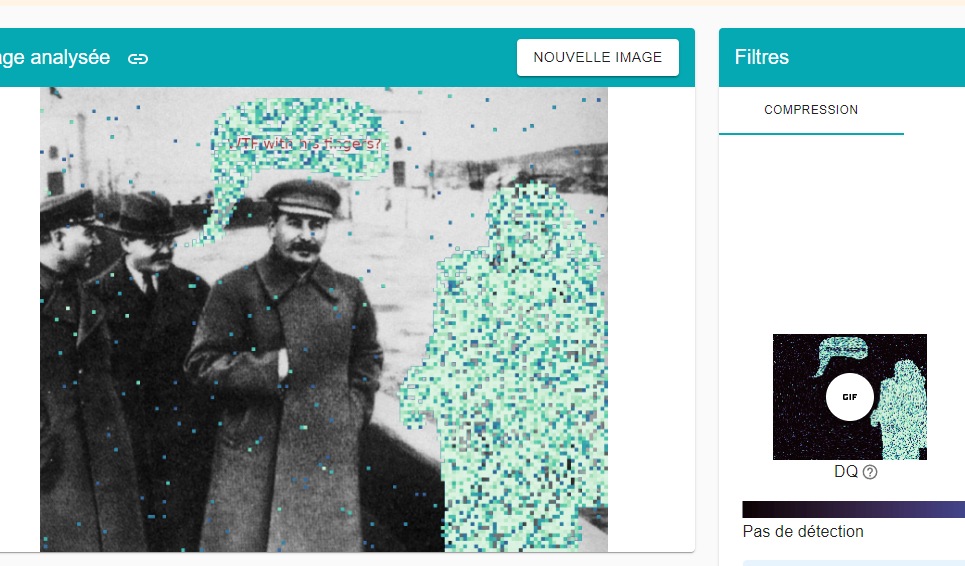

- The GitHub of AFP-Medialab, which seems to offer a set of tools for journalists and fact-checkers. They offer this Chrome extension, which is able to detect my own picture as fake.

The problem is that the picture I used as the base for my own fake was itself forged, and I can't detect anything when I test it.

Also, if I use this tool on the caitfished pictures that were purely generated by AI, I can't detect anything.

So the tool works... on some edited pictures, as long as they were edited on a computer (and not on the physical film)...

Also, just as a side comment: sadly, and as usual with some people, they always have to behave like the keepers of the flame... (no wait... I said "gatekeeper of the truth" before).

- Another website that seems to detect our fake picture is https://mever.iti.gr/forensics/

Like the plugin above, this tool is able to detect our fake but doesn't detect anything on the caitfished pictures.

If you don't want to install the AFP-Medialab plugin, this website offers a good alternative.

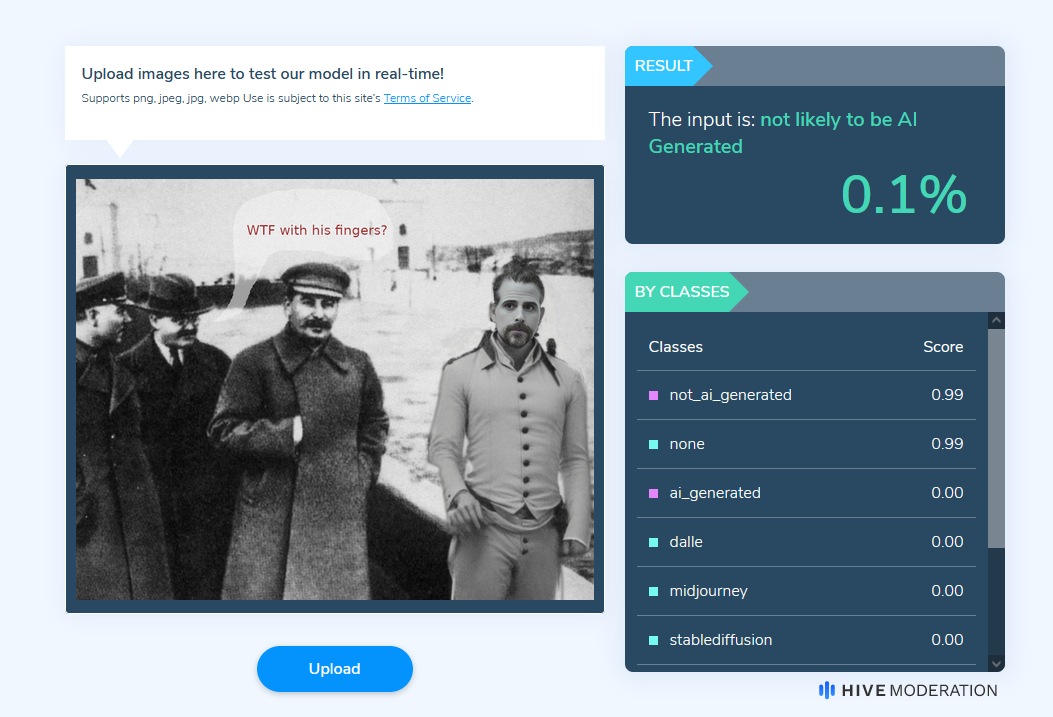

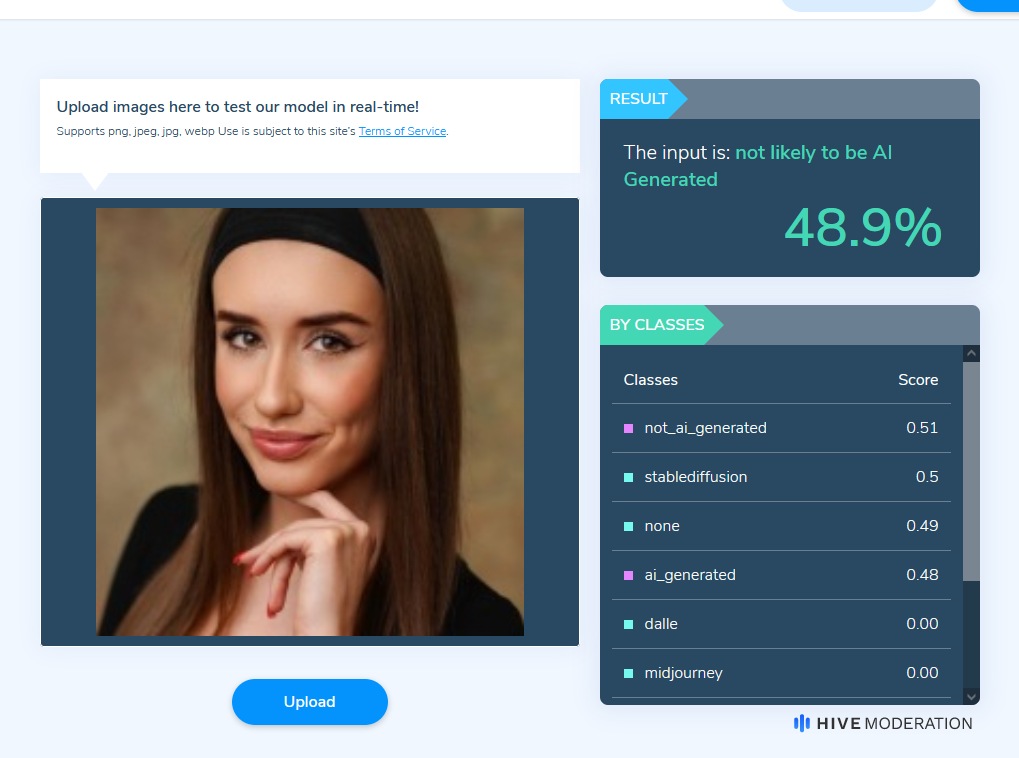

- HiveModeration's website is dedicated to content moderation and offers a very interesting tool to detect AI-generated pictures... and

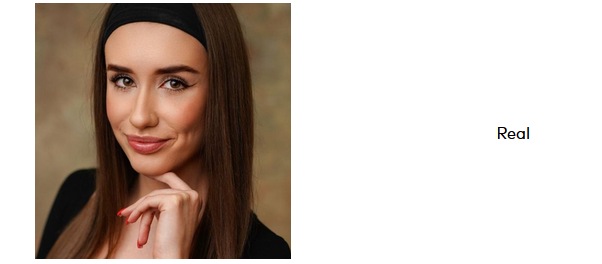

Well, actually the character on the right was AI-generated, but the whole picture wasn't. So that's an interesting result.

But what is even more interesting is that this tool is very good at recognizing the fake pictures of caitfished, even when the picture was converted to a thumbnail afterwards. I had one false negative at 48.9% (at least I think the picture was fake, since I can't double-check now; anyway, that is close to 50%), but everything else was detected correctly:

Edit: I was wrong:

Anyway, HiveModeration currently does much better than its competitors.
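A score such as 48.9% only becomes a verdict once a threshold is chosen. Below is a minimal sketch, assuming the tool returns a probability that a picture is AI-generated and that the cut-off is 50% (my assumption; I don't know how Hive sets its own). The scores in the example are made up for illustration; one of them mirrors the 48.9% case.

```python
def confusion(scores, is_fake, threshold=0.5):
    """Count outcomes when 'score >= threshold' means 'flagged as AI-generated'."""
    counts = {"true_positive": 0, "false_negative": 0,
              "false_positive": 0, "true_negative": 0}
    for score, fake in zip(scores, is_fake):
        flagged = score >= threshold
        if fake:
            counts["true_positive" if flagged else "false_negative"] += 1
        else:
            counts["false_positive" if flagged else "true_negative"] += 1
    return counts
```

With the hypothetical scores `[0.99, 0.97, 0.489, 0.02, 0.10]` for three fakes followed by two real pictures, the 0.489 fake becomes the single false negative at a 0.5 threshold, and lowering the threshold to 0.45 removes it. A borderline score says more about the choice of threshold than about the tool itself.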

- AI Art Detector: It rarely gets anything right. I linked it because they explain what they did.

- DMimageDetection: A GitHub repository to train your own AI to detect pictures created by diffusion models, with a link to the related publication.

***

- This GitHub repository lists resources related to deep fake detection: https://github.com/clpeng/Awesome-Face-Forgery-Generation-and-Detection

The problem is that deep fakes are somewhat the previous generation of technology compared to image generation: you put a face onto a body that looks somewhat alike. So I didn't go very deep into these links.

Again: you have to use a tool that is suitable for what you want to detect, and you don't necessarily know what you want to detect. If I use an infinity of tools, I will certainly get some false positives, so you have to be clever and use a method that suits you.
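The "infinity of tools" remark is the classic multiple-comparisons problem. If each tool independently flags a genuine picture with false-positive rate $p$, the chance that at least one of $n$ tools flags it is $1-(1-p)^n$. Independence is a simplifying assumption (many tools share techniques), and the 5% rate used below is hypothetical. The sketch also shows the Bonferroni correction, a standard swapped-in remedy: require each tool to have rate $\alpha/n$ so that the overall rate stays at or below $\alpha$.

```python
def prob_any_false_positive(p, n):
    """Chance that at least one of n independent tools wrongly flags a real picture."""
    return 1 - (1 - p) ** n


def bonferroni_rate(alpha, n):
    """Per-tool false-positive rate that keeps the overall rate at or below alpha."""
    return alpha / n
```

With a hypothetical 5% false-positive rate per tool, `prob_any_false_positive(0.05, 10)` is about 0.40: with 10 independent tools, there is roughly a 40% chance that at least one of them wrongly flags a genuine picture. Requiring 0.5% per tool (`bonferroni_rate(0.05, 10)`) brings the overall rate back under 5%.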

Here are more "deep fake" links:

fake-face-detection: Another GitHub repository with "some collected paper and personal notes relevant to Fake Face Detetection"

MRI-GAN: "A Generalized Approach to Detect DeepFakes using Perceptual Image Assessment": Code to detect deep fakes

deepfake-detect: an online tool to detect deep fakes. It is not able to detect "diffusion" pictures as fake, and it does not detect my fake correctly.

Here are 2 links to GitHub repositories with source code. Since this is certainly not my last post on detecting fake pictures, I may try their source code to see what I can actually do with it.