How to install Flash-Attention on a Windows system?

WanVideoWrapper from Kijai is a useful series of nodes for WanVideo. However, it requires Flash-Attention to be installed in your Python venv.

So I will focus on how to install this tool in a version that is compatible with WanVideoWrapper.

The date of this tutorial matters (October 11, 2025), since the version of Flash-Attention that WanVideoWrapper needs will likely change.

You could simply run:

pip install flash-attn --no-build-isolation

(Maybe this will be enough in the future.)

However, you would notice that it installs flash-attn 2.7.0.post2.

And if you install WanVideoWrapper, you will then notice that it is incompatible with this version:

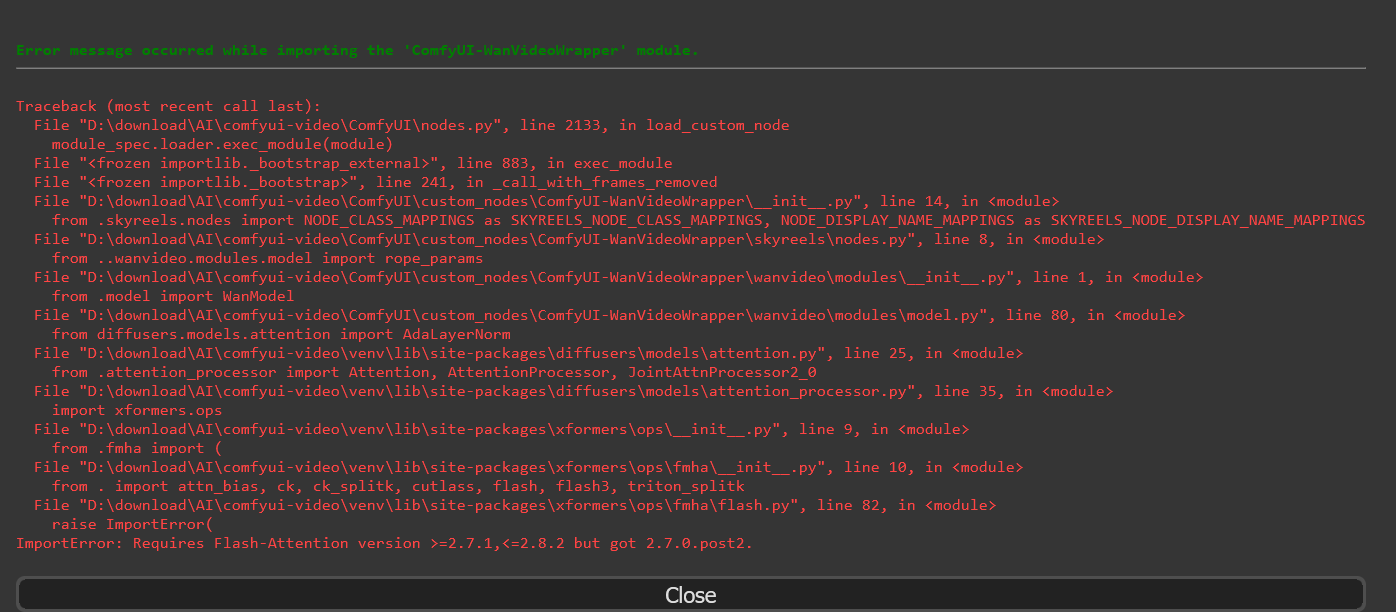

Error message occurred while importing the 'ComfyUI-WanVideoWrapper' module.

...

ImportError: Requires Flash-Attention version >=2.7.1,<=2.8.2 but got 2.7.0.post2.
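To check the installed version before starting ComfyUI, something like the following sketch can help. The range is copied from the error message above. The comparison is my own simplification: it only looks at the major.minor.patch numbers and ignores suffixes such as ".post2", so it is not necessarily the exact check that WanVideoWrapper performs.

```python
import re
from importlib import metadata

# Range copied from the ImportError message quoted above.
MIN_VERSION = (2, 7, 1)
MAX_VERSION = (2, 8, 2)


def release_tuple(version: str) -> tuple:
    """Return the leading numeric part, e.g. '2.7.0.post2' -> (2, 7, 0)."""
    match = re.match(r"(\d+)\.(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    return tuple(int(part) for part in match.groups())


def is_compatible(version: str) -> bool:
    """Simplified check: compares major.minor.patch only, ignoring suffixes."""
    return MIN_VERSION <= release_tuple(version) <= MAX_VERSION


def installed_flash_attn_version():
    """Return the installed flash-attn version string, or None if it is absent."""
    try:
        return metadata.version("flash-attn")
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    found = installed_flash_attn_version()
    if found is None:
        print("flash-attn is not installed in this environment")
    else:
        verdict = "inside" if is_compatible(found) else "outside"
        print(f"flash-attn {found} is {verdict} the range from the error message")
```

Run it with the Python of your ComfyUI venv, otherwise it reports on a different environment.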

I don't remember having any problem installing Flash-Attention on Ubuntu.

This may be linked to the fact that the author of Flash-Attention (?) provides wheel files (.whl) for Linux.

Of course, you could try to find another source of wheel files for Windows, to get a compatible version:

https://huggingface.co/lldacing/flash-attention-windows-wheel/tree/main

But after running: pip install "flash_attn-2.7.4.post1+cu128torch2.8.0cxx11abiTRUE-cp310-cp310-win_amd64.whl"

I got this error with ComfyUI:

ImportError: DLL load failed while importing flash_attn_2_cuda: The specified procedure could not be found.

So this was not a working option for me either.
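I did not diagnose this DLL error, so take the following only as a sketch for reading the build tags out of a wheel name like the one above (Flash-Attention version, CUDA, torch, Python tag) so that you can compare them with your own environment. The regular expression is my assumption, based only on that one file name. Matching tags do not guarantee that the wheel will load.

```python
import re
import sys

# Pattern written for names like the wheel quoted above; other naming
# schemes would need a different pattern (assumption).
WHEEL_NAME = re.compile(
    r"^flash_attn-(?P<version>[^+]+)"
    r"\+cu(?P<cuda>\d+)torch(?P<torch>\d+(?:\.\d+)*)"
    r"cxx11abi(?P<cxx11abi>TRUE|FALSE)"
    r"-(?P<python>cp\d+)-(?P<abi>cp\d+)-(?P<platform>\w+)\.whl$"
)


def parse_wheel_name(filename: str) -> dict:
    """Split a flash_attn wheel file name into its build tags."""
    match = WHEEL_NAME.match(filename)
    if match is None:
        raise ValueError(f"file name does not follow the expected scheme: {filename!r}")
    return match.groupdict()


def local_python_tag() -> str:
    """Return the CPython tag of the running interpreter, e.g. 'cp310'."""
    return f"cp{sys.version_info.major}{sys.version_info.minor}"


if __name__ == "__main__":
    name = "flash_attn-2.7.4.post1+cu128torch2.8.0cxx11abiTRUE-cp310-cp310-win_amd64.whl"
    tags = parse_wheel_name(name)
    for key, value in tags.items():
        print(f"{key}: {value}")
    if tags["python"] != local_python_tag():
        print(f"Python tag differs from this interpreter ({local_python_tag()})")
```

The torch and CUDA tags can be compared by hand with torch.__version__ and torch.version.cuda in the same venv.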

This meant that I would likely have to compile Flash-Attention from source.

This involves going to this page:

https://github.com/Dao-AILab/flash-attention

and noticing that the latest release is v2.8.3, which is not compatible with WanVideoWrapper (>=2.7.1,<=2.8.2).

It also involves checking the installation prerequisites.

It is probably useful to try the following:

(in your ComfyUI venv)

Check if you have ninja:

ninja --version

If not:

pip install ninja

Check if you have packaging:

pip show packaging

If not:

pip install packaging

It also seemed useful to have triton, which on a Windows system is installed with:

pip install triton-windows

The GitHub page of Flash-Attention recommends triton==3.2.0; however, I am using triton-windows, so I didn't bother to match the version.
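The package checks above can also be done in one go from Python. This is only a convenience sketch; the list of names is the one used in this tutorial, and it reports what is installed in the environment whose Python runs the script.

```python
from importlib import metadata

# Package names taken from the pip commands in this tutorial.
PREREQUISITES = ("ninja", "packaging", "triton-windows")


def installed_versions(names=PREREQUISITES) -> dict:
    """Map each package name to its installed version, or None if it is missing."""
    versions = {}
    for name in names:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    for name, version in installed_versions().items():
        print(f"{name}: {version if version else 'missing (pip install ' + name + ')'}")
```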

From here on, I suggest that you read everything before trying any of the commands that I list, because I first explain what didn't work for me and which errors I got.

I then ran:

git clone https://github.com/Dao-AILab/flash-attention

cd flash-attention

To use a version compatible with WanVideoWrapper:

git checkout -b v2.8.2 v2.8.2

Then, in my cmd prompt:

set "MAX_JOBS=4" && pip install .

(The quotes keep cmd from storing the space before && as part of the value.)

PowerShell equivalent: $env:MAX_JOBS="4"; pip install .

Quote: "If your machine has less than 96GB of RAM and lots of CPU cores, ninja might run too many parallel compilation jobs that could exhaust the amount of RAM. To limit the number of parallel compilation jobs, you can set the environment variable MAX_JOBS:"

Hence the MAX_JOBS=4.
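If you prefer not to depend on the quoting rules of cmd or PowerShell, the same thing can be sketched in Python: set MAX_JOBS only for the build process, then run pip in the source directory. The function names are mine and purely illustrative. Note that this is the same direct build that failed for me with the HTTP 404 error described below.

```python
import os
import subprocess
import sys


def build_environment(max_jobs: int) -> dict:
    """Return a copy of the current environment with MAX_JOBS set."""
    if max_jobs < 1:
        raise ValueError("max_jobs must be at least 1")
    env = os.environ.copy()
    env["MAX_JOBS"] = str(max_jobs)  # plain digits, no stray whitespace
    return env


def install_from_source(source_dir: str, max_jobs: int = 4) -> int:
    """Run 'pip install .' in source_dir and return pip's exit code."""
    command = [sys.executable, "-m", "pip", "install", "."]
    completed = subprocess.run(command, cwd=source_dir, env=build_environment(max_jobs))
    return completed.returncode


# Example call (not executed here, because the build takes hours):
# install_from_source("flash-attention", max_jobs=4)
```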

After 1 or 2 hours of compilation, it ended with this error:

"File "C:\Users\Miaw\AppData\Local\Programs\Python\Python310\lib\urllib\request.py", line 643, in http_error_default

raise HTTPError(req.full_url, code, msg, hdrs, fp)

urllib.error.HTTPError: HTTP Error 404: Not Found"

I didn't try this again, since I switched to a method that finally worked:

1 - Install the MSVC (Microsoft Visual C++) compiler, or make sure that it is already on your system.

I used version 2022.

You will also need CMake and the SDK for your OS (so for me: Windows 10).

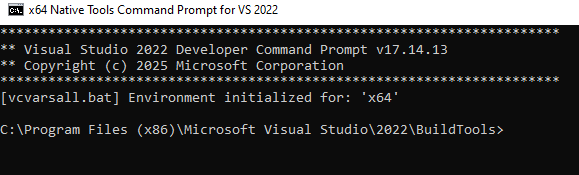

In the Windows Start menu, you should have a "Visual Studio 2022" folder containing a shortcut to "x64 Native Tools Command Prompt for VS 2022".

Open this command-line tool, or open the equivalent tool for your own system (x86 for example).

You have to run the compilation from this command-line tool; otherwise, you will get an error like this:

raise RuntimeError(message) from e

RuntimeError: Error compiling objects for extension
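A quick way to see whether the prompt you opened actually exposes the compiler is to look for cl (the MSVC compiler executable) on the PATH. The sketch below does that from Python. Checking nvcc and git as well is my own addition: the build needs the CUDA compiler and git, but the exact list is an assumption.

```python
import shutil


def locate_tools(names=("cl", "nvcc", "git")) -> dict:
    """Map each executable name to its full path, or None if it is not on PATH."""
    return {name: shutil.which(name) for name in names}


if __name__ == "__main__":
    for name, path in locate_tools().items():
        print(f"{name}: {path or 'not found on PATH'}")
```

In a plain cmd window cl is usually reported as not found, while in the "x64 Native Tools Command Prompt" it should resolve to a path inside the Visual Studio installation.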

2 - Download this bat file:

https://huggingface.co/lldacing/flash-attention-windows-wheel/blob/main/WindowsWhlBuilder_cuda.bat

It makes the compilation easier.

This bat file is meant to be run from the flash-attention directory. However, if that directory sits at an arbitrary (long) path, odds are high that the build will fail with an error message saying that some file name is too long.

That's why I used a directory with a short path on an external drive for the compilation.

By the way, this tutorial is adapted from this one:

https://huggingface.co/lldacing/flash-attention-windows-wheel
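To get a rough idea of how close a clone is to the "file name too long" problem mentioned above, the following sketch measures the longest path under a directory and compares it with the classic Windows limit of 260 characters. It only sees files that already exist; the build creates deeper temporary paths, so the real headroom is smaller than what it reports.

```python
import os

# Classic Windows MAX_PATH limit.
WINDOWS_MAX_PATH = 260


def longest_path(root: str) -> str:
    """Return the longest absolute path found under root (or root itself)."""
    longest = os.path.abspath(root)
    for current, dirnames, filenames in os.walk(root):
        for name in dirnames + filenames:
            candidate = os.path.abspath(os.path.join(current, name))
            if len(candidate) > len(longest):
                longest = candidate
    return longest


def remaining_headroom(root: str) -> int:
    """Characters left before the longest existing path reaches the limit."""
    return WINDOWS_MAX_PATH - len(longest_path(root))


if __name__ == "__main__":
    root = "."  # run it from inside the cloned directory
    print(f"longest path: {longest_path(root)}")
    print(f"headroom before {WINDOWS_MAX_PATH} characters: {remaining_headroom(root)}")
```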

3 - In this "x64 Native Tools Command Prompt for VS 2022" (or your equivalent), activate your ComfyUI venv:

(PATH)\venv\Scripts\activate.bat

4 - Go to the root of a drive that is not your system drive (not C:\).

I did it on a USB drive (F:\), so I will use it in the examples.

cd F:\

F:

(The second command switches cmd to that drive.)

git clone https://github.com/Dao-AILab/flash-attention

Rename the "flash-attention" directory to something short, like "fa", then enter it:

cd fa

To use a version compatible with WanVideoWrapper:

git checkout -b v2.8.2 v2.8.2

Put the WindowsWhlBuilder_cuda.bat file that you downloaded earlier inside this "fa" folder.

Then run it:

WindowsWhlBuilder_cuda.bat

After 4 or 5 hours, my wheel file (.whl) was in F:\fa\dist.

Then install it with a command like this:

pip install "(your wheel file)"
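Before starting ComfyUI, the install can be tested directly from the venv. This sketch simply tries to import a module and reports the error text if the import fails, which would also surface a "DLL load failed" message like the one I got with the downloaded wheel. The helper name is hypothetical.

```python
import importlib


def probe_import(module_name: str):
    """Try to import a module; return (True, version) or (False, error text)."""
    try:
        module = importlib.import_module(module_name)
    except ImportError as error:
        return False, str(error)
    return True, getattr(module, "__version__", "unknown")


if __name__ == "__main__":
    ok, detail = probe_import("flash_attn")
    if ok:
        print(f"flash_attn imported, version {detail}")
    else:
        print(f"flash_attn failed to import: {detail}")
```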

And thanks to this, I was able to run the WanVideoWrapper nodes in ComfyUI.